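Time travel

Review

We discussed the motivations for human-in-the-loop:

(1) Approval: we can interrupt our agent, surface its state to a user, and allow the user to accept an action.

(2) Debugging: we can rewind the graph to reproduce or avoid issues.

(3) Editing: we can modify the state.

We showed how breakpoints can stop the graph at specific nodes, or how the graph can dynamically interrupt itself.

Then we showed how to proceed with human approval, or how to directly edit the graph state with human feedback.

Goals

Now, let's show how LangGraph supports debugging by letting us view, replay, and even fork from past states.

We call this time travel.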

```python
%%capture --no-stderr
%pip install --quiet -U langgraph langchain_openai langgraph_sdk
```

```python
from dotenv import load_dotenv

load_dotenv("../.env", override=True)
```

```text
True
```

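```python
import os
import getpass


def _set_env(var: str):
    # Prompt for the value only if it is not already set (e.g. loaded from .env)
    if not os.environ.get(var):
        os.environ[var] = getpass.getpass(f"{var}: ")


_set_env("OPENAI_API_KEY")
```

Let's build our agent.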

```python
from langchain_openai import ChatOpenAI


# These plain functions will be exposed to the model as tools
def multiply(a: int, b: int) -> int:
    """Multiply a and b.

    Args:
        a: first int
        b: second int
    """
    return a * b


def add(a: int, b: int) -> int:
    """Adds a and b.

    Args:
        a: first int
        b: second int
    """
    return a + b


def divide(a: int, b: int) -> float:
    """Divide a by b.

    Args:
        a: first int
        b: second int
    """
    return a / b


tools = [add, multiply, divide]
llm = ChatOpenAI(model="gpt-4o")
llm_with_tools = llm.bind_tools(tools)
```

```python
from IPython.display import Image, display

from langgraph.checkpoint.memory import MemorySaver
from langgraph.graph import MessagesState
from langgraph.graph import START, END, StateGraph
from langgraph.prebuilt import tools_condition, ToolNode

from langchain_core.messages import AIMessage, HumanMessage, SystemMessage

# System message
sys_msg = SystemMessage(
    content="You are a helpful assistant tasked with performing arithmetic on a set of inputs."
)


# Node
def assistant(state: MessagesState):
    response = llm_with_tools.invoke([sys_msg] + state["messages"])
    return {"messages": [response]}


# Graph
builder = StateGraph(MessagesState)
builder.add_node("assistant", assistant)
builder.add_node("tools", ToolNode(tools))
builder.add_edge(START, "assistant")
builder.add_conditional_edges("assistant", tools_condition)
builder.add_edge("tools", "assistant")

# The checkpointer saves a snapshot of the state after every step;
# time travel relies on these saved checkpoints
memory = MemorySaver()
graph = builder.compile(checkpointer=memory)

# Show
display(Image(graph.get_graph(xray=True).draw_mermaid_png()))
```
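To make the control flow of this graph concrete, here is a toy, framework-free model of the loop it wires up. It assumes only what the code above states: `assistant` runs first, `tools_condition` sends the run to the node named "tools" when the last AI message contains tool calls and ends it otherwise, and `tools` always hands control back to `assistant`. Messages are plain dicts and the model replies are scripted, so nothing here calls OpenAI or LangGraph; `TOY_END`, `route_after_assistant`, and `run_toy_agent` are hypothetical names.

```python
# Toy model of the assistant/tools loop above (plain Python, no LangGraph).
TOY_END = "__end__"  # hypothetical stand-in for LangGraph's END sentinel


def route_after_assistant(last_message: dict) -> str:
    """Mimic tools_condition: go to "tools" if tool calls were requested."""
    return "tools" if last_message.get("tool_calls") else TOY_END


def run_toy_agent(scripted_replies: list, toolbox: dict) -> tuple:
    """Walk the loop with pre-scripted model replies.

    Returns (visited node names, final message list).
    """
    messages = [{"role": "user", "content": "Multiply 2 and 3"}]
    replies = iter(scripted_replies)
    visited = []
    node = "assistant"  # START -> assistant
    while node != TOY_END:
        visited.append(node)
        if node == "assistant":
            reply = next(replies)  # stands in for llm_with_tools.invoke(...)
            messages.append(reply)
            node = route_after_assistant(reply)
        else:  # "tools": run every requested call, then return to assistant
            for call in messages[-1]["tool_calls"]:
                result = toolbox[call["name"]](**call["args"])
                messages.append({"role": "tool", "content": str(result)})
            node = "assistant"
    return visited, messages


scripted = [
    {"role": "ai", "content": "", "tool_calls": [{"name": "multiply", "args": {"a": 2, "b": 3}}]},
    {"role": "ai", "content": "The result of multiplying 2 and 3 is 6.", "tool_calls": []},
]
visited, final_messages = run_toy_agent(scripted, {"multiply": lambda a, b: a * b})
print(visited)  # ['assistant', 'tools', 'assistant']
```

The three visits correspond to the assistant, tools, and assistant steps in the run that follows.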

Let's run it, as before.

```python
initial_input = {"messages": HumanMessage(content="Multiply 2 and 3")}
thread = {"configurable": {"thread_id": "1"}}

for event in graph.stream(initial_input, thread, stream_mode="values"):
    event["messages"][-1].pretty_print()
```

```text
================================ Human Message =================================

Multiply 2 and 3
================================== Ai Message ==================================
Tool Calls:
  multiply (call_4aJg1UguZJz6ExpPoKfIWbyQ)
 Call ID: call_4aJg1UguZJz6ExpPoKfIWbyQ
  Args:
    a: 2
    b: 3
================================= Tool Message =================================
Name: multiply

6
================================== Ai Message ==================================

The result of multiplying 2 and 3 is 6.
```

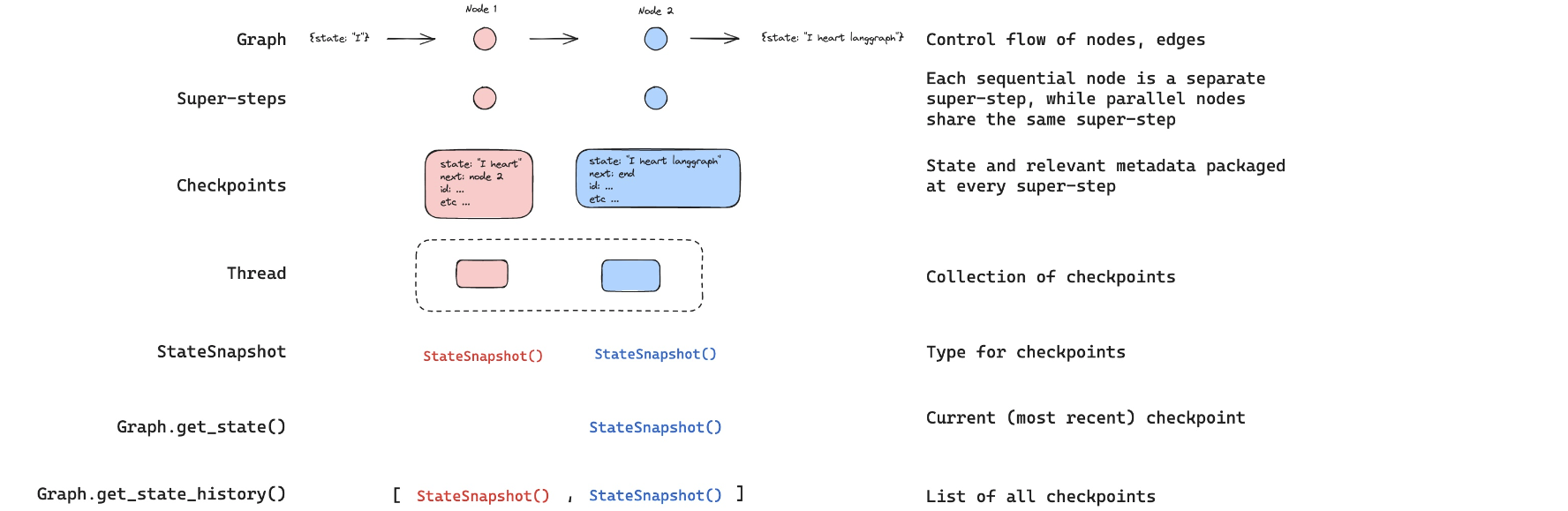

Browsing history

Given a thread_id, we can use get_state to view the current state of the graph!

```python
graph.get_state({"configurable": {"thread_id": "1"}})
```

```text
StateSnapshot(values={'messages': [HumanMessage(content='Multiply 2 and 3', additional_kwargs={}, response_metadata={}, id='973bbdd9-135a-440e-a1bb-8267d382b673'), AIMessage(content='', additional_kwargs={'tool_calls': [{'id': 'call_4aJg1UguZJz6ExpPoKfIWbyQ', 'function': {'arguments': '{"a":2,"b":3}', 'name': 'multiply'}, 'type': 'function'}], 'refusal': None}, response_metadata={'token_usage': {'completion_tokens': 17, 'prompt_tokens': 143, 'total_tokens': 160, 'completion_tokens_details': {'accepted_prediction_tokens': 0, 'audio_tokens': 0, 'reasoning_tokens': 0, 'rejected_prediction_tokens': 0}, 'prompt_tokens_details': {'audio_tokens': 0, 'cached_tokens': 0}}, 'model_name': 'gpt-4o-2024-08-06', 'system_fingerprint': 'fp_cbf1785567', 'id': 'chatcmpl-CLjVBdNwV81YrVxtykppccxbINyGk', 'service_tier': 'default', 'finish_reason': 'tool_calls', 'logprobs': None}, id='run--d7964d00-abd9-43f7-90d4-a3af0ca81911-0', tool_calls=[{'name': 'multiply', 'args': {'a': 2, 'b': 3}, 'id': 'call_4aJg1UguZJz6ExpPoKfIWbyQ', 'type': 'tool_call'}], usage_metadata={'input_tokens': 143, 'output_tokens': 17, 'total_tokens': 160, 'input_token_details': {'audio': 0, 'cache_read': 0}, 'output_token_details': {'audio': 0, 'reasoning': 0}}), ToolMessage(content='6', name='multiply', id='33c73278-fdf1-43b5-b6ec-41328ba77545', tool_call_id='call_4aJg1UguZJz6ExpPoKfIWbyQ'), AIMessage(content='The result of multiplying 2 and 3 is 6.', additional_kwargs={'refusal': None}, response_metadata={'token_usage': {'completion_tokens': 15, 'prompt_tokens': 168, 'total_tokens': 183, 'completion_tokens_details': {'accepted_prediction_tokens': 0, 'audio_tokens': 0, 'reasoning_tokens': 0, 'rejected_prediction_tokens': 0}, 'prompt_tokens_details': {'audio_tokens': 0, 'cached_tokens': 0}}, 'model_name': 'gpt-4o-2024-08-06', 'system_fingerprint': 'fp_f33640a400', 'id': 'chatcmpl-CLjVDpfIHzwkshHV83cZjPAGmDED9', 'service_tier': 'default', 'finish_reason': 'stop', 'logprobs': None}, id='run--8bae31a2-f9bd-4f06-abed-3bcad70e74b9-0', usage_metadata={'input_tokens': 168, 'output_tokens': 15, 'total_tokens': 183, 'input_token_details': {'audio': 0, 'cache_read': 0}, 'output_token_details': {'audio': 0, 'reasoning': 0}})]}, next=(), config={'configurable': {'thread_id': '1', 'checkpoint_ns': '', 'checkpoint_id': '1f09e85e-5192-6c3c-8003-15c4673e10ad'}}, metadata={'source': 'loop', 'step': 3, 'parents': {}}, created_at='2025-10-01T05:17:15.995628+00:00', parent_config={'configurable': {'thread_id': '1', 'checkpoint_ns': '', 'checkpoint_id': '1f09e85e-3b31-6204-8002-f80c358e42b7'}}, tasks=(), interrupts=())
```

We can also browse the state history of our agent.

get_state_history lets us get the state at every prior step.

```python
all_states = [s for s in graph.get_state_history(thread)]
```

```python
len(all_states)
```

```text
5
```

```python
all_states
```

```text
[StateSnapshot(values={'messages': [HumanMessage(content='Multiply 2 and 3', additional_kwargs={}, response_metadata={}, id='973bbdd9-135a-440e-a1bb-8267d382b673'), AIMessage(content='', additional_kwargs={'tool_calls': [{'id': 'call_4aJg1UguZJz6ExpPoKfIWbyQ', 'function': {'arguments': '{"a":2,"b":3}', 'name': 'multiply'}, 'type': 'function'}], 'refusal': None}, response_metadata={'token_usage': {'completion_tokens': 17, 'prompt_tokens': 143, 'total_tokens': 160, 'completion_tokens_details': {'accepted_prediction_tokens': 0, 'audio_tokens': 0, 'reasoning_tokens': 0, 'rejected_prediction_tokens': 0}, 'prompt_tokens_details': {'audio_tokens': 0, 'cached_tokens': 0}}, 'model_name': 'gpt-4o-2024-08-06', 'system_fingerprint': 'fp_cbf1785567', 'id': 'chatcmpl-CLjVBdNwV81YrVxtykppccxbINyGk', 'service_tier': 'default', 'finish_reason': 'tool_calls', 'logprobs': None}, id='run--d7964d00-abd9-43f7-90d4-a3af0ca81911-0', tool_calls=[{'name': 'multiply', 'args': {'a': 2, 'b': 3}, 'id': 'call_4aJg1UguZJz6ExpPoKfIWbyQ', 'type': 'tool_call'}], usage_metadata={'input_tokens': 143, 'output_tokens': 17, 'total_tokens': 160, 'input_token_details': {'audio': 0, 'cache_read': 0}, 'output_token_details': {'audio': 0, 'reasoning': 0}}), ToolMessage(content='6', name='multiply', id='33c73278-fdf1-43b5-b6ec-41328ba77545', tool_call_id='call_4aJg1UguZJz6ExpPoKfIWbyQ'), AIMessage(content='The result of multiplying 2 and 3 is 6.', additional_kwargs={'refusal': None}, response_metadata={'token_usage': {'completion_tokens': 15, 'prompt_tokens': 168, 'total_tokens': 183, 'completion_tokens_details': {'accepted_prediction_tokens': 0, 'audio_tokens': 0, 'reasoning_tokens': 0, 'rejected_prediction_tokens': 0}, 'prompt_tokens_details': {'audio_tokens': 0, 'cached_tokens': 0}}, 'model_name': 'gpt-4o-2024-08-06', 'system_fingerprint': 'fp_f33640a400', 'id': 'chatcmpl-CLjVDpfIHzwkshHV83cZjPAGmDED9', 'service_tier': 'default', 'finish_reason': 'stop', 'logprobs': None}, id='run--8bae31a2-f9bd-4f06-abed-3bcad70e74b9-0', usage_metadata={'input_tokens': 168, 'output_tokens': 15, 'total_tokens': 183, 'input_token_details': {'audio': 0, 'cache_read': 0}, 'output_token_details': {'audio': 0, 'reasoning': 0}})]}, next=(), config={'configurable': {'thread_id': '1', 'checkpoint_ns': '', 'checkpoint_id': '1f09e85e-5192-6c3c-8003-15c4673e10ad'}}, metadata={'source': 'loop', 'step': 3, 'parents': {}}, created_at='2025-10-01T05:17:15.995628+00:00', parent_config={'configurable': {'thread_id': '1', 'checkpoint_ns': '', 'checkpoint_id': '1f09e85e-3b31-6204-8002-f80c358e42b7'}}, tasks=(), interrupts=()),
 StateSnapshot(values={'messages': [HumanMessage(content='Multiply 2 and 3', additional_kwargs={}, response_metadata={}, id='973bbdd9-135a-440e-a1bb-8267d382b673'), AIMessage(content='', additional_kwargs={'tool_calls': [{'id': 'call_4aJg1UguZJz6ExpPoKfIWbyQ', 'function': {'arguments': '{"a":2,"b":3}', 'name': 'multiply'}, 'type': 'function'}], 'refusal': None}, response_metadata={'token_usage': {'completion_tokens': 17, 'prompt_tokens': 143, 'total_tokens': 160, 'completion_tokens_details': {'accepted_prediction_tokens': 0, 'audio_tokens': 0, 'reasoning_tokens': 0, 'rejected_prediction_tokens': 0}, 'prompt_tokens_details': {'audio_tokens': 0, 'cached_tokens': 0}}, 'model_name': 'gpt-4o-2024-08-06', 'system_fingerprint': 'fp_cbf1785567', 'id': 'chatcmpl-CLjVBdNwV81YrVxtykppccxbINyGk', 'service_tier': 'default', 'finish_reason': 'tool_calls', 'logprobs': None}, id='run--d7964d00-abd9-43f7-90d4-a3af0ca81911-0', tool_calls=[{'name': 'multiply', 'args': {'a': 2, 'b': 3}, 'id': 'call_4aJg1UguZJz6ExpPoKfIWbyQ', 'type': 'tool_call'}], usage_metadata={'input_tokens': 143, 'output_tokens': 17, 'total_tokens': 160, 'input_token_details': {'audio': 0, 'cache_read': 0}, 'output_token_details': {'audio': 0, 'reasoning': 0}}), ToolMessage(content='6', name='multiply', id='33c73278-fdf1-43b5-b6ec-41328ba77545', tool_call_id='call_4aJg1UguZJz6ExpPoKfIWbyQ')]}, next=('assistant',), config={'configurable': {'thread_id': '1', 'checkpoint_ns': '', 'checkpoint_id': '1f09e85e-3b31-6204-8002-f80c358e42b7'}}, metadata={'source': 'loop', 'step': 2, 'parents': {}}, created_at='2025-10-01T05:17:13.648787+00:00', parent_config={'configurable': {'thread_id': '1', 'checkpoint_ns': '', 'checkpoint_id': '1f09e85e-3b2d-6d84-8001-c404fb9eb53e'}}, tasks=(PregelTask(id='416a8aa9-909e-d3a2-611c-5e34c2c50d85', name='assistant', path=('__pregel_pull', 'assistant'), error=None, interrupts=(), state=None, result={'messages': [AIMessage(content='The result of multiplying 2 and 3 is 6.', additional_kwargs={'refusal': None}, response_metadata={'token_usage': {'completion_tokens': 15, 'prompt_tokens': 168, 'total_tokens': 183, 'completion_tokens_details': {'accepted_prediction_tokens': 0, 'audio_tokens': 0, 'reasoning_tokens': 0, 'rejected_prediction_tokens': 0}, 'prompt_tokens_details': {'audio_tokens': 0, 'cached_tokens': 0}}, 'model_name': 'gpt-4o-2024-08-06', 'system_fingerprint': 'fp_f33640a400', 'id': 'chatcmpl-CLjVDpfIHzwkshHV83cZjPAGmDED9', 'service_tier': 'default', 'finish_reason': 'stop', 'logprobs': None}, id='run--8bae31a2-f9bd-4f06-abed-3bcad70e74b9-0', usage_metadata={'input_tokens': 168, 'output_tokens': 15, 'total_tokens': 183, 'input_token_details': {'audio': 0, 'cache_read': 0}, 'output_token_details': {'audio': 0, 'reasoning': 0}})]}),), interrupts=()),
 StateSnapshot(values={'messages': [HumanMessage(content='Multiply 2 and 3', additional_kwargs={}, response_metadata={}, id='973bbdd9-135a-440e-a1bb-8267d382b673'), AIMessage(content='', additional_kwargs={'tool_calls': [{'id': 'call_4aJg1UguZJz6ExpPoKfIWbyQ', 'function': {'arguments': '{"a":2,"b":3}', 'name': 'multiply'}, 'type': 'function'}], 'refusal': None}, response_metadata={'token_usage': {'completion_tokens': 17, 'prompt_tokens': 143, 'total_tokens': 160, 'completion_tokens_details': {'accepted_prediction_tokens': 0, 'audio_tokens': 0, 'reasoning_tokens': 0, 'rejected_prediction_tokens': 0}, 'prompt_tokens_details': {'audio_tokens': 0, 'cached_tokens': 0}}, 'model_name': 'gpt-4o-2024-08-06', 'system_fingerprint': 'fp_cbf1785567', 'id': 'chatcmpl-CLjVBdNwV81YrVxtykppccxbINyGk', 'service_tier': 'default', 'finish_reason': 'tool_calls', 'logprobs': None}, id='run--d7964d00-abd9-43f7-90d4-a3af0ca81911-0', tool_calls=[{'name': 'multiply', 'args': {'a': 2, 'b': 3}, 'id': 'call_4aJg1UguZJz6ExpPoKfIWbyQ', 'type': 'tool_call'}], usage_metadata={'input_tokens': 143, 'output_tokens': 17, 'total_tokens': 160, 'input_token_details': {'audio': 0, 'cache_read': 0}, 'output_token_details': {'audio': 0, 'reasoning': 0}})]}, next=('tools',), config={'configurable': {'thread_id': '1', 'checkpoint_ns': '', 'checkpoint_id': '1f09e85e-3b2d-6d84-8001-c404fb9eb53e'}}, metadata={'source': 'loop', 'step': 1, 'parents': {}}, created_at='2025-10-01T05:17:13.647437+00:00', parent_config={'configurable': {'thread_id': '1', 'checkpoint_ns': '', 'checkpoint_id': '1f09e85e-2fdd-6880-8000-8aba4d5c508d'}}, tasks=(PregelTask(id='8a3c1888-9c71-3db9-9fd9-6cabc418485b', name='tools', path=('__pregel_pull', 'tools'), error=None, interrupts=(), state=None, result={'messages': [ToolMessage(content='6', name='multiply', id='33c73278-fdf1-43b5-b6ec-41328ba77545', tool_call_id='call_4aJg1UguZJz6ExpPoKfIWbyQ')]}),), interrupts=()),
 StateSnapshot(values={'messages': [HumanMessage(content='Multiply 2 and 3', additional_kwargs={}, response_metadata={}, id='973bbdd9-135a-440e-a1bb-8267d382b673')]}, next=('assistant',), config={'configurable': {'thread_id': '1', 'checkpoint_ns': '', 'checkpoint_id': '1f09e85e-2fdd-6880-8000-8aba4d5c508d'}}, metadata={'source': 'loop', 'step': 0, 'parents': {}}, created_at='2025-10-01T05:17:12.461111+00:00', parent_config={'configurable': {'thread_id': '1', 'checkpoint_ns': '', 'checkpoint_id': '1f09e85e-2fdc-6624-bfff-2641c9a2f85d'}}, tasks=(PregelTask(id='d2f0d45b-ebb0-3c18-1eeb-0e7dc5411ad8', name='assistant', path=('__pregel_pull', 'assistant'), error=None, interrupts=(), state=None, result={'messages': [AIMessage(content='', additional_kwargs={'tool_calls': [{'id': 'call_4aJg1UguZJz6ExpPoKfIWbyQ', 'function': {'arguments': '{"a":2,"b":3}', 'name': 'multiply'}, 'type': 'function'}], 'refusal': None}, response_metadata={'token_usage': {'completion_tokens': 17, 'prompt_tokens': 143, 'total_tokens': 160, 'completion_tokens_details': {'accepted_prediction_tokens': 0, 'audio_tokens': 0, 'reasoning_tokens': 0, 'rejected_prediction_tokens': 0}, 'prompt_tokens_details': {'audio_tokens': 0, 'cached_tokens': 0}}, 'model_name': 'gpt-4o-2024-08-06', 'system_fingerprint': 'fp_cbf1785567', 'id': 'chatcmpl-CLjVBdNwV81YrVxtykppccxbINyGk', 'service_tier': 'default', 'finish_reason': 'tool_calls', 'logprobs': None}, id='run--d7964d00-abd9-43f7-90d4-a3af0ca81911-0', tool_calls=[{'name': 'multiply', 'args': {'a': 2, 'b': 3}, 'id': 'call_4aJg1UguZJz6ExpPoKfIWbyQ', 'type': 'tool_call'}], usage_metadata={'input_tokens': 143, 'output_tokens': 17, 'total_tokens': 160, 'input_token_details': {'audio': 0, 'cache_read': 0}, 'output_token_details': {'audio': 0, 'reasoning': 0}})]}),), interrupts=()),
 StateSnapshot(values={'messages': []}, next=('__start__',), config={'configurable': {'thread_id': '1', 'checkpoint_ns': '', 'checkpoint_id': '1f09e85e-2fdc-6624-bfff-2641c9a2f85d'}}, metadata={'source': 'input', 'step': -1, 'parents': {}}, created_at='2025-10-01T05:17:12.460645+00:00', parent_config=None, tasks=(PregelTask(id='43feb9ec-bd9c-2399-9f4f-0138f198a6ac', name='__start__', path=('__pregel_pull', '__start__'), error=None, interrupts=(), state=None, result={'messages': HumanMessage(content='Multiply 2 and 3', additional_kwargs={}, response_metadata={}, id='973bbdd9-135a-440e-a1bb-8267d382b673')}),), interrupts=())]
```

The first element is the current state, the same one we got from get_state.
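The relationship between get_state and get_state_history can be pictured with a toy, in-memory log for a single thread. This is a simplified sketch, not MemorySaver's implementation: it assumes one snapshot is appended per step, that the current state is the newest snapshot, and that history is returned newest first, which is what the five snapshots above show (steps 3, 2, 1, 0 and -1). `ToySnapshot` and `ToyThread` are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class ToySnapshot:
    step: int           # mirrors metadata["step"]
    messages: list      # mirrors values["messages"] (labels only)
    next_nodes: tuple   # mirrors StateSnapshot.next


@dataclass
class ToyThread:
    """Snapshots for one thread_id, stored oldest first."""
    snapshots: list = field(default_factory=list)

    def save(self, snapshot: ToySnapshot) -> None:
        self.snapshots.append(snapshot)

    def get_state(self) -> ToySnapshot:
        # The current state is simply the most recent snapshot.
        return self.snapshots[-1]

    def get_state_history(self) -> list:
        # Newest first, matching the order of all_states above.
        return list(reversed(self.snapshots))


toy_thread = ToyThread()
toy_thread.save(ToySnapshot(-1, [], ("__start__",)))
toy_thread.save(ToySnapshot(0, ["human"], ("assistant",)))
toy_thread.save(ToySnapshot(1, ["human", "ai(tool call)"], ("tools",)))
toy_thread.save(ToySnapshot(2, ["human", "ai(tool call)", "tool"], ("assistant",)))
toy_thread.save(ToySnapshot(3, ["human", "ai(tool call)", "tool", "ai"], ()))

toy_history = toy_thread.get_state_history()
print(len(toy_history))                          # 5
print(toy_history[0] is toy_thread.get_state())  # True
print(toy_history[-2].next_nodes)                # ('assistant',)
```

In the toy, as in the real output, the second-to-last entry is the checkpoint right after the human message was added, with the assistant node up next.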

```python
all_states[-2]
```

```text
StateSnapshot(values={'messages': [HumanMessage(content='Multiply 2 and 3', additional_kwargs={}, response_metadata={}, id='973bbdd9-135a-440e-a1bb-8267d382b673')]}, next=('assistant',), config={'configurable': {'thread_id': '1', 'checkpoint_ns': '', 'checkpoint_id': '1f09e85e-2fdd-6880-8000-8aba4d5c508d'}}, metadata={'source': 'loop', 'step': 0, 'parents': {}}, created_at='2025-10-01T05:17:12.461111+00:00', parent_config={'configurable': {'thread_id': '1', 'checkpoint_ns': '', 'checkpoint_id': '1f09e85e-2fdc-6624-bfff-2641c9a2f85d'}}, tasks=(PregelTask(id='d2f0d45b-ebb0-3c18-1eeb-0e7dc5411ad8', name='assistant', path=('__pregel_pull', 'assistant'), error=None, interrupts=(), state=None, result={'messages': [AIMessage(content='', additional_kwargs={'tool_calls': [{'id': 'call_4aJg1UguZJz6ExpPoKfIWbyQ', 'function': {'arguments': '{"a":2,"b":3}', 'name': 'multiply'}, 'type': 'function'}], 'refusal': None}, response_metadata={'token_usage': {'completion_tokens': 17, 'prompt_tokens': 143, 'total_tokens': 160, 'completion_tokens_details': {'accepted_prediction_tokens': 0, 'audio_tokens': 0, 'reasoning_tokens': 0, 'rejected_prediction_tokens': 0}, 'prompt_tokens_details': {'audio_tokens': 0, 'cached_tokens': 0}}, 'model_name': 'gpt-4o-2024-08-06', 'system_fingerprint': 'fp_cbf1785567', 'id': 'chatcmpl-CLjVBdNwV81YrVxtykppccxbINyGk', 'service_tier': 'default', 'finish_reason': 'tool_calls', 'logprobs': None}, id='run--d7964d00-abd9-43f7-90d4-a3af0ca81911-0', tool_calls=[{'name': 'multiply', 'args': {'a': 2, 'b': 3}, 'id': 'call_4aJg1UguZJz6ExpPoKfIWbyQ', 'type': 'tool_call'}], usage_metadata={'input_tokens': 143, 'output_tokens': 17, 'total_tokens': 160, 'input_token_details': {'audio': 0, 'cache_read': 0}, 'output_token_details': {'audio': 0, 'reasoning': 0}})]}),), interrupts=())
```

We can visualize all of the above here:

Replaying

We can re-run our agent from any of the prior steps.

Let's look back at the step that received human input!

```python
to_replay = all_states[-2]
```

```python
to_replay
```

```text
StateSnapshot(values={'messages': [HumanMessage(content='Multiply 2 and 3', additional_kwargs={}, response_metadata={}, id='973bbdd9-135a-440e-a1bb-8267d382b673')]}, next=('assistant',), config={'configurable': {'thread_id': '1', 'checkpoint_ns': '', 'checkpoint_id': '1f09e85e-2fdd-6880-8000-8aba4d5c508d'}}, metadata={'source': 'loop', 'step': 0, 'parents': {}}, created_at='2025-10-01T05:17:12.461111+00:00', parent_config={'configurable': {'thread_id': '1', 'checkpoint_ns': '', 'checkpoint_id': '1f09e85e-2fdc-6624-bfff-2641c9a2f85d'}}, tasks=(PregelTask(id='d2f0d45b-ebb0-3c18-1eeb-0e7dc5411ad8', name='assistant', path=('__pregel_pull', 'assistant'), error=None, interrupts=(), state=None, result={'messages': [AIMessage(content='', additional_kwargs={'tool_calls': [{'id': 'call_4aJg1UguZJz6ExpPoKfIWbyQ', 'function': {'arguments': '{"a":2,"b":3}', 'name': 'multiply'}, 'type': 'function'}], 'refusal': None}, response_metadata={'token_usage': {'completion_tokens': 17, 'prompt_tokens': 143, 'total_tokens': 160, 'completion_tokens_details': {'accepted_prediction_tokens': 0, 'audio_tokens': 0, 'reasoning_tokens': 0, 'rejected_prediction_tokens': 0}, 'prompt_tokens_details': {'audio_tokens': 0, 'cached_tokens': 0}}, 'model_name': 'gpt-4o-2024-08-06', 'system_fingerprint': 'fp_cbf1785567', 'id': 'chatcmpl-CLjVBdNwV81YrVxtykppccxbINyGk', 'service_tier': 'default', 'finish_reason': 'tool_calls', 'logprobs': None}, id='run--d7964d00-abd9-43f7-90d4-a3af0ca81911-0', tool_calls=[{'name': 'multiply', 'args': {'a': 2, 'b': 3}, 'id': 'call_4aJg1UguZJz6ExpPoKfIWbyQ', 'type': 'tool_call'}], usage_metadata={'input_tokens': 143, 'output_tokens': 17, 'total_tokens': 160, 'input_token_details': {'audio': 0, 'cache_read': 0}, 'output_token_details': {'audio': 0, 'reasoning': 0}})]}),), interrupts=())
```

Let's look at the state.

```python
to_replay.values
```

```text
{'messages': [HumanMessage(content='Multiply 2 and 3', additional_kwargs={}, response_metadata={}, id='973bbdd9-135a-440e-a1bb-8267d382b673')]}
```

We can see the next node to call.

```python
to_replay.next
```

```text
('assistant',)
```

We also get the config, which tells us the checkpoint_id as well as the thread_id.

```python
to_replay.config
```

```text
{'configurable': {'thread_id': '1',
  'checkpoint_ns': '',
  'checkpoint_id': '1f09e85e-2fdd-6880-8000-8aba4d5c508d'}}
```
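A config is just a nested dict, so you can also build one by hand from a stored thread_id and checkpoint_id, for example IDs you logged earlier. The helper `checkpoint_config` is a hypothetical name: it only reproduces the dict shape printed above. Leaving out checkpoint_id gives the thread-level config used earlier with get_state, which refers to the latest checkpoint of the thread.

```python
from typing import Optional


def checkpoint_config(thread_id: str, checkpoint_id: Optional[str] = None) -> dict:
    """Build a config dict in the shape shown by to_replay.config.

    Without checkpoint_id the config refers to the thread's latest state;
    with it, the config pins one specific checkpoint.
    """
    configurable = {"thread_id": thread_id, "checkpoint_ns": ""}
    if checkpoint_id is not None:
        configurable["checkpoint_id"] = checkpoint_id
    return {"configurable": configurable}


pinned = checkpoint_config("1", "1f09e85e-2fdd-6880-8000-8aba4d5c508d")
print(pinned)
```

Passing `pinned` to `graph.get_state(...)` should return the same snapshot as `to_replay`, and passing it to `graph.stream(None, ...)` should resume from that checkpoint.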

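To replay from here, we simply pass the config back to the agent!

The graph knows which steps have already been executed up to this checkpoint, so it does not recompute them.

It resumes from this checkpoint and runs the nodes after it again. You can see this in the output below: the tool call ID (call_QVuxfFne1fVNTBHhCTOKHQ6a) differs from the one in the first run (call_4aJg1UguZJz6ExpPoKfIWbyQ), because the assistant node called the model again.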

```python
for event in graph.stream(None, to_replay.config, stream_mode="values"):
    event["messages"][-1].pretty_print()
```

```text
================================ Human Message =================================

Multiply 2 and 3
================================== Ai Message ==================================
Tool Calls:
  multiply (call_QVuxfFne1fVNTBHhCTOKHQ6a)
 Call ID: call_QVuxfFne1fVNTBHhCTOKHQ6a
  Args:
    a: 2
    b: 3
================================= Tool Message =================================
Name: multiply

6
================================== Ai Message ==================================

The result of multiplying 2 and 3 is 6.
```

Now, we can see the current state after the agent re-ran.

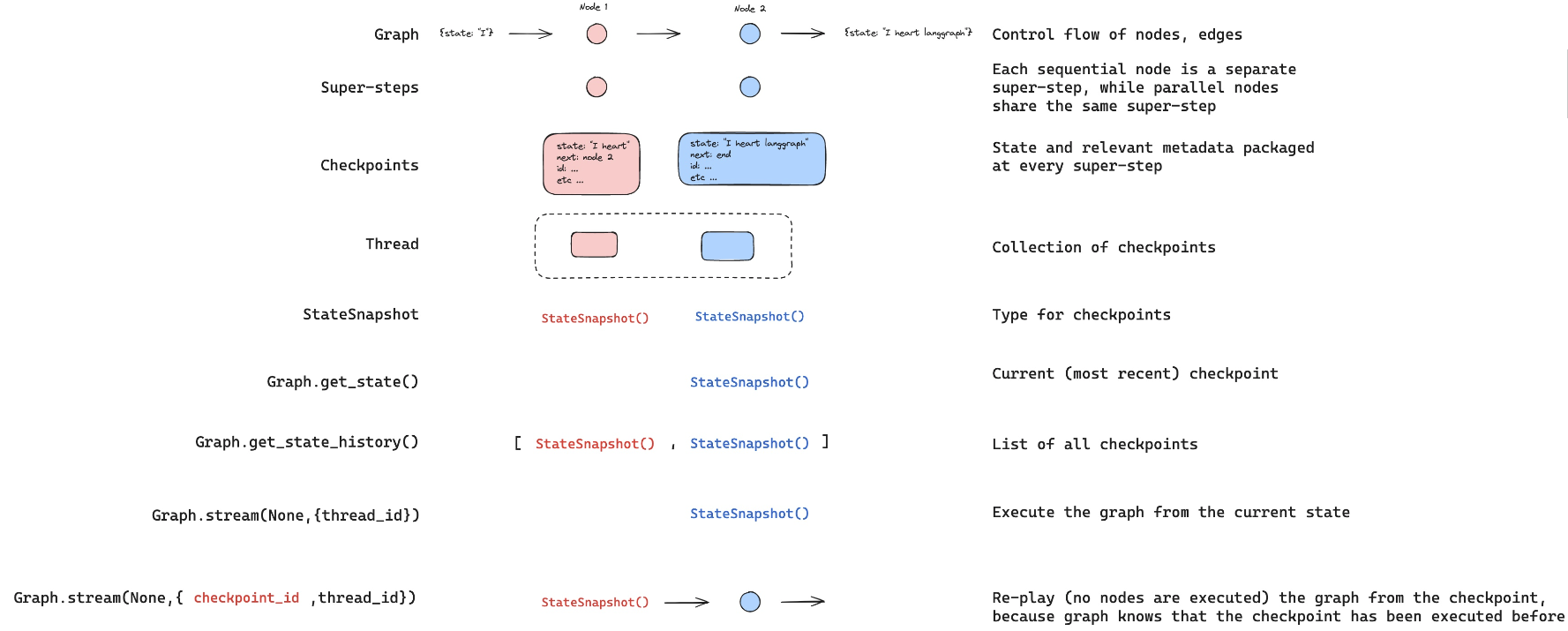
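A toy model helps explain what the replay output shows. It assumes, as described above, that resuming from a checkpoint re-runs the nodes after it, and additionally (a simplification of our own, not LangGraph's storage format) that every new step is stored as a new checkpoint pointing to its parent, so the first run is kept while the replay grows a second branch from the same checkpoint. The numbered `call_N` labels stand in for the fresh tool call IDs the model generates on each call; all class and function names are hypothetical.

```python
import itertools
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Checkpoint:
    id: int
    parent_id: Optional[int]
    messages: tuple
    next_nodes: tuple  # mirrors StateSnapshot.next


class ToyCheckpointer:
    """Stores every checkpoint by ID; nothing is ever overwritten."""

    def __init__(self):
        self._ids = itertools.count()
        self.by_id = {}

    def save(self, parent_id, messages, next_nodes) -> Checkpoint:
        cp = Checkpoint(next(self._ids), parent_id, tuple(messages), tuple(next_nodes))
        self.by_id[cp.id] = cp
        return cp


model_calls = itertools.count(1)  # each model call yields a new tool call ID


def run_from(store: ToyCheckpointer, start: Checkpoint) -> Checkpoint:
    """Execute the nodes after `start`, saving one child checkpoint per step."""
    cp = start
    while cp.next_nodes:
        if cp.next_nodes[0] == "assistant":
            if cp.messages[-1].startswith("tool:"):
                new_msg, nxt = "ai:final answer", ()
            else:
                new_msg, nxt = f"ai:call_{next(model_calls)}", ("tools",)
        else:  # "tools"
            new_msg, nxt = "tool:6", ("assistant",)
        cp = store.save(cp.id, cp.messages + (new_msg,), nxt)
    return cp


def lineage(store: ToyCheckpointer, cp: Optional[Checkpoint]) -> list:
    """Checkpoint IDs from `cp` back to the root, newest first."""
    ids = []
    while cp is not None:
        ids.append(cp.id)
        cp = store.by_id.get(cp.parent_id)
    return ids


store = ToyCheckpointer()
after_input = store.save(None, ["human:Multiply 2 and 3"], ["assistant"])
first_end = run_from(store, after_input)   # original run
replay_end = run_from(store, after_input)  # resume from the same checkpoint

print(first_end.messages[1], replay_end.messages[1])  # ai:call_1 ai:call_2
print(lineage(store, first_end))   # [3, 2, 1, 0]
print(lineage(store, replay_end))  # [6, 5, 4, 0]
```

In the toy, the original branch is untouched and the replay is a second branch rooted at the same checkpoint, with a new tool call label just like the new call ID in the real output.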

Forking

What if we want to run from that same step, but with a different input?

This is forking.

```python
to_fork = all_states[-2]
to_fork.values["messages"]
```

```text
[HumanMessage(content='Multiply 2 and 3', additional_kwargs={}, response_metadata={}, id='973bbdd9-135a-440e-a1bb-8267d382b673')]
```

Again, we have the config.

```python
to_fork.config
```

```text
{'configurable': {'thread_id': '1',
  'checkpoint_ns': '',
  'checkpoint_id': '1f09e85e-2fdd-6880-8000-8aba4d5c508d'}}
```

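Let's modify the state at this checkpoint.

We can just run update_state, passing to_fork.config, which contains the checkpoint_id.

Remember how our reducer on messages works:

- It will append, unless we supply a message ID.

- We supply the message ID to overwrite the message, rather than appending it to the state!

So, to overwrite the message, we just supply its message ID, which we have in to_fork.values["messages"][0].id.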
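The two rules above can be sketched in plain Python. MessagesState actually uses LangGraph's add_messages reducer, which does more (for example, converting inputs to message objects and handling message removal); this toy `merge_messages` function, a hypothetical name, keeps only the ID logic: a message without an ID gets a fresh one and is appended, and a message whose ID already exists replaces the old one in place.

```python
import uuid


def merge_messages(current: list, updates: list) -> list:
    """Toy ID-based merge: overwrite on a matching ID, append otherwise."""
    merged = list(current)  # never mutate the existing state
    position = {m["id"]: i for i, m in enumerate(merged)}
    for msg in updates:
        msg = dict(msg)
        if msg.get("id") is None:
            msg["id"] = str(uuid.uuid4())  # no ID supplied: treat as a new message
        if msg["id"] in position:
            merged[position[msg["id"]]] = msg  # same ID: overwrite in place
        else:
            position[msg["id"]] = len(merged)
            merged.append(msg)  # new ID: append
    return merged


# Shortened version of the message ID shown in the output above
original = [{"id": "973bbdd9", "role": "user", "content": "Multiply 2 and 3"}]

appended = merge_messages(original, [{"role": "user", "content": "Multiply 5 and 3."}])
replaced = merge_messages(original, [{"id": "973bbdd9", "role": "user", "content": "Multiply 5 and 3."}])

print(len(appended), len(replaced))  # 2 1
print(replaced[0]["content"])        # Multiply 5 and 3.
```

This is why the update_state call reuses the existing message ID: without it, the edited question would be appended after the original one instead of replacing it.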

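```python
fork_config = graph.update_state(
    to_fork.config,
    {
        "messages": [
            HumanMessage(
                content="Multiply 5 and 3.",
                # Reuse the existing ID so the reducer overwrites the
                # original message instead of appending a new one
                id=to_fork.values["messages"][0].id,
            )
        ]
    },
)
```

```python
fork_config
```

```text
{'configurable': {'thread_id': '1',
  'checkpoint_ns': '',
  'checkpoint_id': '1f09e869-d3aa-603e-8001-f4d1a9e01058'}}
```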

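This creates a new, forked checkpoint.

But the information about where to go next is preserved: the forked checkpoint still has next=('assistant',), and its parent_config points to the checkpoint we forked from.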
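What update_state does to the thread can be modeled with another toy store. This is a simplification, not LangGraph's checkpointer: it assumes only what the get_state output for the fork in this section shows, namely that the edited values are written as a new checkpoint whose parent is the checkpoint we forked from, that its `next` is inherited (still `('assistant',)`), and that its metadata source is `'update'`. `ToyStore` and its methods are hypothetical names.

```python
import itertools


class ToyStore:
    """Toy checkpoint store: every write adds a checkpoint with a parent link."""

    def __init__(self):
        self._ids = itertools.count()
        self.checkpoints = {}

    def put(self, parent_id, messages, next_nodes, source):
        cp_id = next(self._ids)
        self.checkpoints[cp_id] = {
            "parent_id": parent_id,
            "messages": list(messages),
            "next": tuple(next_nodes),
            "source": source,  # mirrors metadata["source"]
        }
        return cp_id

    def update_state(self, cp_id, messages):
        """Fork: store edited messages as a child of cp_id, inheriting `next`."""
        base = self.checkpoints[cp_id]
        return self.put(cp_id, messages, base["next"], source="update")


toy_store = ToyStore()
forked_from = toy_store.put(None, ["Multiply 2 and 3"], ["assistant"], source="loop")
# The original run's later steps, collapsed into one checkpoint for brevity
original_end = toy_store.put(
    forked_from, ["Multiply 2 and 3", "tool call", "6", "answer: 6"], [], source="loop"
)
fork_id = toy_store.update_state(forked_from, ["Multiply 5 and 3."])

fork = toy_store.checkpoints[fork_id]
print(fork["parent_id"] == forked_from, fork["next"], fork["source"])
# True ('assistant',) update
```

The original checkpoints are left untouched; the fork is simply a sibling branch under the same parent.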

We can see the current state of our agent has been updated with our fork.

```python
all_states = [state for state in graph.get_state_history(thread)]
all_states[0].values["messages"]
```

```text
[HumanMessage(content='Multiply 5 and 3.', additional_kwargs={}, response_metadata={}, id='973bbdd9-135a-440e-a1bb-8267d382b673')]
```

```python
graph.get_state({"configurable": {"thread_id": "1"}})
```

```text
StateSnapshot(values={'messages': [HumanMessage(content='Multiply 5 and 3.', additional_kwargs={}, response_metadata={}, id='973bbdd9-135a-440e-a1bb-8267d382b673')]}, next=('assistant',), config={'configurable': {'thread_id': '1', 'checkpoint_ns': '', 'checkpoint_id': '1f09e869-d3aa-603e-8001-f4d1a9e01058'}}, metadata={'source': 'update', 'step': 1, 'parents': {}}, created_at='2025-10-01T05:22:24.915661+00:00', parent_config={'configurable': {'thread_id': '1', 'checkpoint_ns': '', 'checkpoint_id': '1f09e85e-2fdd-6880-8000-8aba4d5c508d'}}, tasks=(PregelTask(id='d6f509e5-cdd8-5fbd-2395-2f37c1badd6c', name='assistant', path=('__pregel_pull', 'assistant'), error=None, interrupts=(), state=None, result=None),), interrupts=())
```

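Now, when we stream from fork_config, the graph has never executed anything from this new checkpoint.

So, rather than replaying earlier results, the graph runs the assistant node on the edited message, and the model produces a tool call with the new arguments.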

```python
for event in graph.stream(None, fork_config, stream_mode="values"):
    event["messages"][-1].pretty_print()
```

```text
================================ Human Message =================================

Multiply 5 and 3.
================================== Ai Message ==================================
Tool Calls:
  multiply (call_crtTt2gk1VgLRuqjtwaO5dMz)
 Call ID: call_crtTt2gk1VgLRuqjtwaO5dMz
  Args:
    a: 5
    b: 3
================================= Tool Message =================================
Name: multiply

15
================================== Ai Message ==================================

Multiplying 5 and 3 gives 15.
```

Now, we can see that the current state is the end of our agent run.

```python
graph.get_state({"configurable": {"thread_id": "1"}})
```

```text
StateSnapshot(values={'messages': [HumanMessage(content='Multiply 5 and 3.', additional_kwargs={}, response_metadata={}, id='973bbdd9-135a-440e-a1bb-8267d382b673'), AIMessage(content='', additional_kwargs={'tool_calls': [{'id': 'call_crtTt2gk1VgLRuqjtwaO5dMz', 'function': {'arguments': '{"a":5,"b":3}', 'name': 'multiply'}, 'type': 'function'}], 'refusal': None}, response_metadata={'token_usage': {'completion_tokens': 17, 'prompt_tokens': 144, 'total_tokens': 161, 'completion_tokens_details': {'accepted_prediction_tokens': 0, 'audio_tokens': 0, 'reasoning_tokens': 0, 'rejected_prediction_tokens': 0}, 'prompt_tokens_details': {'audio_tokens': 0, 'cached_tokens': 0}}, 'model_name': 'gpt-4o-2024-08-06', 'system_fingerprint': 'fp_cbf1785567', 'id': 'chatcmpl-CLjb6pRmEJCHOCBn2ylYcCXUXRhcE', 'service_tier': 'default', 'finish_reason': 'tool_calls', 'logprobs': None}, id='run--1413bd57-6634-4cac-9f90-b209718317ec-0', tool_calls=[{'name': 'multiply', 'args': {'a': 5, 'b': 3}, 'id': 'call_crtTt2gk1VgLRuqjtwaO5dMz', 'type': 'tool_call'}], usage_metadata={'input_tokens': 144, 'output_tokens': 17, 'total_tokens': 161, 'input_token_details': {'audio': 0, 'cache_read': 0}, 'output_token_details': {'audio': 0, 'reasoning': 0}}), ToolMessage(content='15', name='multiply', id='27c4c66c-93c7-497b-a683-4fa0afe27064', tool_call_id='call_crtTt2gk1VgLRuqjtwaO5dMz'), AIMessage(content='Multiplying 5 and 3 gives 15.', additional_kwargs={'refusal': None}, response_metadata={'token_usage': {'completion_tokens': 13, 'prompt_tokens': 169, 'total_tokens': 182, 'completion_tokens_details': {'accepted_prediction_tokens': 0, 'audio_tokens': 0, 'reasoning_tokens': 0, 'rejected_prediction_tokens': 0}, 'prompt_tokens_details': {'audio_tokens': 0, 'cached_tokens': 0}}, 'model_name': 'gpt-4o-2024-08-06', 'system_fingerprint': 'fp_cbf1785567', 'id': 'chatcmpl-CLjb8or4yMBDLK1iflMYKsYSfY2mK', 'service_tier': 'default', 'finish_reason': 'stop', 'logprobs': None}, id='run--d5ee7c1e-0a4b-4b76-9ce4-52b1995cf2a0-0', usage_metadata={'input_tokens': 169, 'output_tokens': 13, 'total_tokens': 182, 'input_token_details': {'audio': 0, 'cache_read': 0}, 'output_token_details': {'audio': 0, 'reasoning': 0}})]}, next=(), config={'configurable': {'thread_id': '1', 'checkpoint_ns': '', 'checkpoint_id': '1f09e86b-fcdf-6ae2-8004-d0ff3907fc09'}}, metadata={'source': 'loop', 'step': 4, 'parents': {}}, created_at='2025-10-01T05:23:22.923877+00:00', parent_config={'configurable': {'thread_id': '1', 'checkpoint_ns': '', 'checkpoint_id': '1f09e86b-f0f5-6a60-8003-98c27df023a0'}}, tasks=(), interrupts=())
```

Time travel with the LangGraph API

To start the local development server, run the following command in your terminal in the /studio directory of this module:

```shell
langgraph dev
```

You should see the following output:

- 🚀 API: http://127.0.0.1:2024

- 🎨 Studio UI: https://smith.langchain.com/studio/?baseUrl=http://127.0.0.1:2024

- 📚 API Docs: http://127.0.0.1:2024/docs

Open your browser and navigate to the Studio UI: https://smith.langchain.com/studio/?baseUrl=http://127.0.0.1:2024.

We'll connect to it via the SDK and show how the LangGraph API supports time travel.

```python
from langgraph_sdk import get_client

client = get_client(url="http://127.0.0.1:2024")
```

Re-playing

Let's run our agent, streaming updates to the graph state after each node is called.

```python
initial_input = {"messages": HumanMessage(content="Multiply 2 and 3")}
thread = await client.threads.create()

async for chunk in client.runs.stream(
    thread["thread_id"],
    assistant_id="agent",
    input=initial_input,
    stream_mode="updates",
):
    if chunk.data:
        # Each "updates" event is keyed by the name of the node that produced it
        assistant_node = chunk.data.get("assistant", {}).get("messages", [])
        tool_node = chunk.data.get("tools", {}).get("messages", [])
        if assistant_node:
            print("-" * 20 + "Assistant Node" + "-" * 20)
            print(assistant_node[-1])
        elif tool_node:
            print("-" * 20 + "Tools Node" + "-" * 20)
            print(tool_node[-1])
```

```text
--------------------Assistant Node--------------------
{'content': '', 'additional_kwargs': {'tool_calls': [{'index': 0, 'id': 'call_SG7XYqDENGq7mwXrnioNLosS', 'function': {'arguments': '{"a":2,"b":3}', 'name': 'multiply'}, 'type': 'function'}]}, 'response_metadata': {'finish_reason': 'tool_calls', 'model_name': 'gpt-4o-2024-05-13', 'system_fingerprint': 'fp_157b3831f5'}, 'type': 'ai', 'name': None, 'id': 'run-2c120fc3-3c82-4599-b8ec-24fbee207cad', 'example': False, 'tool_calls': [{'name': 'multiply', 'args': {'a': 2, 'b': 3}, 'id': 'call_SG7XYqDENGq7mwXrnioNLosS', 'type': 'tool_call'}], 'invalid_tool_calls': [], 'usage_metadata': None}
--------------------Tools Node--------------------
{'content': '6', 'additional_kwargs': {}, 'response_metadata': {}, 'type': 'tool', 'name': 'multiply', 'id': '3b40d091-58b2-4566-a84c-60af67206307', 'tool_call_id': 'call_SG7XYqDENGq7mwXrnioNLosS', 'artifact': None, 'status': 'success'}
--------------------Assistant Node--------------------
{'content': 'The result of multiplying 2 and 3 is 6.', 'additional_kwargs': {}, 'response_metadata': {'finish_reason': 'stop', 'model_name': 'gpt-4o-2024-05-13', 'system_fingerprint': 'fp_fde2829a40'}, 'type': 'ai', 'name': None, 'id': 'run-1272d9b0-a0aa-4ff7-8bad-fdffd27c5506', 'example': False, 'tool_calls': [], 'invalid_tool_calls': [], 'usage_metadata': None}
```

Now, let's look at replaying from a specified checkpoint.

We simply need to pass the checkpoint_id.

```python
states = await client.threads.get_history(thread["thread_id"])
to_replay = states[-2]
to_replay
```

```text
{'values': {'messages': [{'content': 'Multiply 2 and 3',
    'additional_kwargs': {'example': False,
     'additional_kwargs': {},
     'response_metadata': {}},
    'response_metadata': {},
    'type': 'human',
    'name': None,
    'id': 'df98147a-cb3d-4f1a-b7f7-1545c4b6f042',
    'example': False}]},
 'next': ['assistant'],
 'tasks': [{'id': 'e497456f-827a-5027-87bd-b0ccd54aa89a',
   'name': 'assistant',
   'error': None,
   'interrupts': [],
   'state': None}],
 'metadata': {'step': 0,
  'run_id': '1ef6a449-7fbc-6c90-8754-4e6b1b582790',
  'source': 'loop',
  'writes': None,
  'parents': {},
  'user_id': '',
  'graph_id': 'agent',
  'thread_id': '708e1d8f-f7c8-4093-9bb4-999c4237cb4a',
  'created_by': 'system',
  'assistant_id': 'fe096781-5601-53d2-b2f6-0d3403f7e9ca'},
 'created_at': '2024-09-03T22:33:51.380352+00:00',
 'checkpoint_id': '1ef6a449-817f-6b55-8000-07c18fbdf7c8',
 'parent_checkpoint_id': '1ef6a449-816c-6fd6-bfff-32a56dd2635f'}
```
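Picking `states[-2]` relies on its position in the history list. A sketch of a sturdier alternative selects the checkpoint by content instead. It assumes, based on the output above, that `client.threads.get_history` returns state dicts newest first with `values`, `next`, and `checkpoint_id` keys; `find_checkpoint` and `is_after_user_input` are hypothetical helpers, and `sample_history` is hand-written data in that shape, not real API output.

```python
from typing import Callable, Optional


def find_checkpoint(history: list, predicate: Callable[[dict], bool]) -> Optional[dict]:
    """Return the newest state in `history` matching `predicate`, or None."""
    for state in history:  # assumed newest first
        if predicate(state):
            return state
    return None


def is_after_user_input(state: dict) -> bool:
    # The checkpoint right after the human message: one message, assistant next.
    messages = state["values"].get("messages", [])
    return state["next"] == ["assistant"] and len(messages) == 1


# Hand-written sample in the shape of get_history output (not real data)
sample_history = [
    {"checkpoint_id": "c4", "next": [], "values": {"messages": [{}, {}, {}, {}]}},
    {"checkpoint_id": "c3", "next": ["assistant"], "values": {"messages": [{}, {}, {}]}},
    {"checkpoint_id": "c2", "next": ["tools"], "values": {"messages": [{}, {}]}},
    {"checkpoint_id": "c1", "next": ["assistant"], "values": {"messages": [{}]}},
    {"checkpoint_id": "c0", "next": ["__start__"], "values": {"messages": []}},
]

match = find_checkpoint(sample_history, is_after_user_input)
print(match["checkpoint_id"])  # c1
```

With the real thread, `find_checkpoint(states, is_after_user_input)` should pick the same checkpoint as `states[-2]` here; after a replay adds newer checkpoints, it returns the newest match instead of depending on list length.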

재생할 때 모든 노드에서 전체 상태를 보기 위해 stream_mode="values"로 스트리밍해봅시다.

async for chunk in client.runs.stream(

thread["thread_id"],

assistant_id="agent",

input=None,

stream_mode="values",

checkpoint_id=to_replay["checkpoint_id"],

):

print(f"Receiving new event of type: {chunk.event}...")

print(chunk.data)

print("\n\n")Receiving new event of type: metadata...

{'run_id': '1ef6a44a-5806-6bb1-b2ee-92ecfda7f67d'}

Receiving new event of type: values...

{'messages': [{'content': 'Multiply 2 and 3', 'additional_kwargs': {'example': False, 'additional_kwargs': {}, 'response_metadata': {}}, 'response_metadata': {}, 'type': 'human', 'name': None, 'id': 'df98147a-cb3d-4f1a-b7f7-1545c4b6f042', 'example': False}]}

Receiving new event of type: values...

{'messages': [{'content': 'Multiply 2 and 3', 'additional_kwargs': {'example': False, 'additional_kwargs': {}, 'response_metadata': {}}, 'response_metadata': {}, 'type': 'human', 'name': None, 'id': 'df98147a-cb3d-4f1a-b7f7-1545c4b6f042', 'example': False}, {'content': '', 'additional_kwargs': {'tool_calls': [{'index': 0, 'id': 'call_Rn9YQ6iZyYtzrELBz7EfQcs0', 'function': {'arguments': '{"a":2,"b":3}', 'name': 'multiply'}, 'type': 'function'}]}, 'response_metadata': {'finish_reason': 'tool_calls', 'model_name': 'gpt-4o-2024-05-13', 'system_fingerprint': 'fp_157b3831f5'}, 'type': 'ai', 'name': None, 'id': 'run-e60d82d7-7743-4f13-bebd-3616a88720a9', 'example': False, 'tool_calls': [{'name': 'multiply', 'args': {'a': 2, 'b': 3}, 'id': 'call_Rn9YQ6iZyYtzrELBz7EfQcs0', 'type': 'tool_call'}], 'invalid_tool_calls': [], 'usage_metadata': None}]}

Receiving new event of type: values...

{'messages': [{'content': 'Multiply 2 and 3', 'additional_kwargs': {'example': False, 'additional_kwargs': {}, 'response_metadata': {}}, 'response_metadata': {}, 'type': 'human', 'name': None, 'id': 'df98147a-cb3d-4f1a-b7f7-1545c4b6f042', 'example': False}, {'content': '', 'additional_kwargs': {'tool_calls': [{'index': 0, 'id': 'call_Rn9YQ6iZyYtzrELBz7EfQcs0', 'function': {'arguments': '{"a":2,"b":3}', 'name': 'multiply'}, 'type': 'function'}]}, 'response_metadata': {'finish_reason': 'tool_calls', 'model_name': 'gpt-4o-2024-05-13', 'system_fingerprint': 'fp_157b3831f5'}, 'type': 'ai', 'name': None, 'id': 'run-e60d82d7-7743-4f13-bebd-3616a88720a9', 'example': False, 'tool_calls': [{'name': 'multiply', 'args': {'a': 2, 'b': 3}, 'id': 'call_Rn9YQ6iZyYtzrELBz7EfQcs0', 'type': 'tool_call'}], 'invalid_tool_calls': [], 'usage_metadata': None}, {'content': '6', 'additional_kwargs': {}, 'response_metadata': {}, 'type': 'tool', 'name': 'multiply', 'id': 'f1be0b83-4565-4aa2-9b9a-cd8874c6a2bc', 'tool_call_id': 'call_Rn9YQ6iZyYtzrELBz7EfQcs0', 'artifact': None, 'status': 'success'}]}

Receiving new event of type: values...

{'messages': [{'content': 'Multiply 2 and 3', 'additional_kwargs': {'example': False, 'additional_kwargs': {}, 'response_metadata': {}}, 'response_metadata': {}, 'type': 'human', 'name': None, 'id': 'df98147a-cb3d-4f1a-b7f7-1545c4b6f042', 'example': False}, {'content': '', 'additional_kwargs': {'tool_calls': [{'index': 0, 'id': 'call_Rn9YQ6iZyYtzrELBz7EfQcs0', 'function': {'arguments': '{"a":2,"b":3}', 'name': 'multiply'}, 'type': 'function'}]}, 'response_metadata': {'finish_reason': 'tool_calls', 'model_name': 'gpt-4o-2024-05-13', 'system_fingerprint': 'fp_157b3831f5'}, 'type': 'ai', 'name': None, 'id': 'run-e60d82d7-7743-4f13-bebd-3616a88720a9', 'example': False, 'tool_calls': [{'name': 'multiply', 'args': {'a': 2, 'b': 3}, 'id': 'call_Rn9YQ6iZyYtzrELBz7EfQcs0', 'type': 'tool_call'}], 'invalid_tool_calls': [], 'usage_metadata': None}, {'content': '6', 'additional_kwargs': {}, 'response_metadata': {}, 'type': 'tool', 'name': 'multiply', 'id': 'f1be0b83-4565-4aa2-9b9a-cd8874c6a2bc', 'tool_call_id': 'call_Rn9YQ6iZyYtzrELBz7EfQcs0', 'artifact': None, 'status': 'success'}, {'content': 'The result of multiplying 2 and 3 is 6.', 'additional_kwargs': {}, 'response_metadata': {'finish_reason': 'stop', 'model_name': 'gpt-4o-2024-05-13', 'system_fingerprint': 'fp_157b3831f5'}, 'type': 'ai', 'name': None, 'id': 'run-55e5847a-d542-4977-84d7-24852e78b0a9', 'example': False, 'tool_calls': [], 'invalid_tool_calls': [], 'usage_metadata': None}]}
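Each values event above carries the full message list accumulated so far, so every step repeats the earlier messages. To see how that relates to the updates mode used next, the sketch below diffs consecutive snapshots by message id. It is a simplified model that works on plain dicts trimmed from the output above, with shortened, made-up ids. new_messages_per_step is a hypothetical helper, not part of langgraph_sdk. Because it tracks only ids, it would not report a message that was overwritten in place with the same id.

```python
from typing import Any


def new_messages_per_step(snapshots: list[dict[str, Any]]) -> list[list[dict[str, Any]]]:
    """For each `values` snapshot, return the messages whose id did not appear
    in any earlier snapshot, i.e. what that step added to the state."""
    seen: set[str] = set()
    deltas: list[list[dict[str, Any]]] = []
    for snapshot in snapshots:
        added = [m for m in snapshot["messages"] if m["id"] not in seen]
        seen.update(m["id"] for m in snapshot["messages"])
        deltas.append(added)
    return deltas


# Trimmed versions of the three snapshots above (ids shortened for readability).
human = {"id": "h1", "type": "human", "content": "Multiply 2 and 3"}
call = {"id": "a1", "type": "ai", "content": ""}
tool = {"id": "t1", "type": "tool", "content": "6"}
final = {"id": "a2", "type": "ai", "content": "The result of multiplying 2 and 3 is 6."}
snapshots = [
    {"messages": [human, call]},
    {"messages": [human, call, tool]},
    {"messages": [human, call, tool, final]},
]
deltas = new_messages_per_step(snapshots)
```

The first snapshot has no predecessor, so its delta holds both of its messages. Each later delta holds only the single message that its node added.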

We can also see all of this by streaming only the updates to the state made by the nodes we replay.

async for chunk in client.runs.stream(
    thread["thread_id"],
    assistant_id="agent",
    input=None,
    stream_mode="updates",
    checkpoint_id=to_replay["checkpoint_id"],
):
    if chunk.data:
        # Each update is keyed by the name of the node that produced it
        assistant_node = chunk.data.get("assistant", {}).get("messages", [])
        tool_node = chunk.data.get("tools", {}).get("messages", [])
        if assistant_node:
            print("-" * 20 + "Assistant Node" + "-" * 20)
            print(assistant_node[-1])
        elif tool_node:
            print("-" * 20 + "Tools Node" + "-" * 20)
            print(tool_node[-1])

--------------------Assistant Node--------------------

{'content': '', 'additional_kwargs': {'tool_calls': [{'index': 0, 'id': 'call_I2qudhMCwcw1GzcFN5q80rjj', 'function': {'arguments': '{"a":2,"b":3}', 'name': 'multiply'}, 'type': 'function'}]}, 'response_metadata': {'finish_reason': 'tool_calls', 'model_name': 'gpt-4o-2024-05-13', 'system_fingerprint': 'fp_157b3831f5'}, 'type': 'ai', 'name': None, 'id': 'run-550e75ad-dbbc-4e55-9f00-aa896228914c', 'example': False, 'tool_calls': [{'name': 'multiply', 'args': {'a': 2, 'b': 3}, 'id': 'call_I2qudhMCwcw1GzcFN5q80rjj', 'type': 'tool_call'}], 'invalid_tool_calls': [], 'usage_metadata': None}

--------------------Tools Node--------------------

{'content': '6', 'additional_kwargs': {}, 'response_metadata': {}, 'type': 'tool', 'name': 'multiply', 'id': '731b7d4f-780d-4a8b-aec9-0d8b9c58c40a', 'tool_call_id': 'call_I2qudhMCwcw1GzcFN5q80rjj', 'artifact': None, 'status': 'success'}

--------------------Assistant Node--------------------

{'content': 'The result of multiplying 2 and 3 is 6.', 'additional_kwargs': {}, 'response_metadata': {'finish_reason': 'stop', 'model_name': 'gpt-4o-2024-05-13', 'system_fingerprint': 'fp_157b3831f5'}, 'type': 'ai', 'name': None, 'id': 'run-2326afa5-eb43-4568-b5ed-424c0a0fa076', 'example': False, 'tool_calls': [], 'invalid_tool_calls': [], 'usage_metadata': None}
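The replayed messages above have new run ids and tool-call ids, which shows that the nodes after the checkpoint were executed again. Because the model is called again, its output is not guaranteed to match the original run. When you debug with replays, it can help to compare runs only on the parts that matter. The sketch below is a minimal version that assumes each updates chunk's data is a dict with a single node-name key, as in the loop above. summarize_update is a hypothetical helper, and the dicts are trimmed from the outputs above with shortened ids.

```python
from typing import Any


def summarize_update(data: dict[str, Any]) -> tuple[str, str, list[tuple[str, dict]]]:
    """Reduce one `updates` chunk to (node, content, tool calls), dropping the
    run ids and tool-call ids that change on every execution."""
    node, update = next(iter(data.items()))
    last = update["messages"][-1]
    # Tool messages have no "tool_calls" key, so default to an empty list
    calls = [(c["name"], c["args"]) for c in last.get("tool_calls", [])]
    return node, last["content"], calls


answer = "The result of multiplying 2 and 3 is 6."
original = [
    {"assistant": {"messages": [{"id": "run-e60d", "content": "", "tool_calls": [
        {"name": "multiply", "args": {"a": 2, "b": 3}, "id": "call_Rn9Y"}]}]}},
    {"tools": {"messages": [{"id": "f1be", "content": "6"}]}},
    {"assistant": {"messages": [{"id": "run-55e5", "content": answer, "tool_calls": []}]}},
]
replay = [
    {"assistant": {"messages": [{"id": "run-550e", "content": "", "tool_calls": [
        {"name": "multiply", "args": {"a": 2, "b": 3}, "id": "call_I2qu"}]}]}},
    {"tools": {"messages": [{"id": "731b", "content": "6"}]}},
    {"assistant": {"messages": [{"id": "run-2326", "content": answer, "tool_calls": []}]}},
]
same = [summarize_update(d) for d in original] == [summarize_update(d) for d in replay]
```

The two traces differ in every id, yet they compare equal on node order, content, and tool arguments.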

Forking

Now, let's look at forking.

Let's go back to the same step we worked with above: the human input.

First, create a new thread with our agent.

initial_input = {"messages": HumanMessage(content="Multiply 2 and 3")}

thread = await client.threads.create()
async for chunk in client.runs.stream(
    thread["thread_id"],
    assistant_id="agent",
    input=initial_input,
    stream_mode="updates",
):
    if chunk.data:
        # Each update is keyed by the name of the node that produced it
        assistant_node = chunk.data.get("assistant", {}).get("messages", [])
        tool_node = chunk.data.get("tools", {}).get("messages", [])
        if assistant_node:
            print("-" * 20 + "Assistant Node" + "-" * 20)
            print(assistant_node[-1])
        elif tool_node:
            print("-" * 20 + "Tools Node" + "-" * 20)
            print(tool_node[-1])

--------------------Assistant Node--------------------

{'content': '', 'additional_kwargs': {'tool_calls': [{'index': 0, 'id': 'call_HdWoyLELFZGEcqGxFt2fZzek', 'function': {'arguments': '{"a":2,"b":3}', 'name': 'multiply'}, 'type': 'function'}]}, 'response_metadata': {'finish_reason': 'tool_calls', 'model_name': 'gpt-4o-2024-05-13', 'system_fingerprint': 'fp_157b3831f5'}, 'type': 'ai', 'name': None, 'id': 'run-cbd081b1-8cef-4ca8-9dd5-aceb134404dc', 'example': False, 'tool_calls': [{'name': 'multiply', 'args': {'a': 2, 'b': 3}, 'id': 'call_HdWoyLELFZGEcqGxFt2fZzek', 'type': 'tool_call'}], 'invalid_tool_calls': [], 'usage_metadata': None}

--------------------Tools Node--------------------

{'content': '6', 'additional_kwargs': {}, 'response_metadata': {}, 'type': 'tool', 'name': 'multiply', 'id': '11dd4a7f-0b6b-44da-b9a4-65f1677c8813', 'tool_call_id': 'call_HdWoyLELFZGEcqGxFt2fZzek', 'artifact': None, 'status': 'success'}

--------------------Assistant Node--------------------

{'content': 'The result of multiplying 2 and 3 is 6.', 'additional_kwargs': {}, 'response_metadata': {'finish_reason': 'stop', 'model_name': 'gpt-4o-2024-05-13', 'system_fingerprint': 'fp_157b3831f5'}, 'type': 'ai', 'name': None, 'id': 'run-936cf990-9302-45c7-9051-6ff1e2e9f316', 'example': False, 'tool_calls': [], 'invalid_tool_calls': [], 'usage_metadata': None}

states = await client.threads.get_history(thread["thread_id"])
to_fork = states[-2]
to_fork["values"]

{'messages': [{'content': 'Multiply 2 and 3',
   'additional_kwargs': {'example': False,
    'additional_kwargs': {},
    'response_metadata': {}},
   'response_metadata': {},
   'type': 'human',
   'name': None,
   'id': '93c18b95-9050-4a52-99b8-9374e98ee5db',
   'example': False}]}

to_fork["values"]["messages"][0]["id"]

'93c18b95-9050-4a52-99b8-9374e98ee5db'

to_fork["next"]

['assistant']

to_fork["checkpoint_id"]

'1ef6a44b-27ec-681c-8000-ff7e345aee7e'
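get_history lists checkpoints newest first. That is why states[-2], the second-oldest entry, is the checkpoint right after the human input, with next equal to ['assistant']. A positional index like this depends on how many checkpoints the call returns. A less position-dependent option is to match on the step recorded in each checkpoint's metadata, which the history entries include. The sketch below is a minimal version. checkpoint_at_step is a hypothetical helper, and the history uses made-up ids. It assumes the usual step numbering, where the input checkpoint is step -1 and the checkpoint after START is step 0.

```python
from typing import Any, Optional


def checkpoint_at_step(states: list[dict[str, Any]], step: int) -> Optional[dict[str, Any]]:
    """Return the first entry in `states` whose metadata step equals `step`.

    History is listed newest first, so if several checkpoints share a step
    (for example after a fork), this returns the most recent one."""
    for state in states:
        if state["metadata"]["step"] == step:
            return state
    return None


# Hypothetical history for the "Multiply 2 and 3" run, newest first.
history = [
    {"checkpoint_id": "c4", "next": [], "metadata": {"step": 3}},
    {"checkpoint_id": "c3", "next": ["assistant"], "metadata": {"step": 2}},
    {"checkpoint_id": "c2", "next": ["tools"], "metadata": {"step": 1}},
    {"checkpoint_id": "c1", "next": ["assistant"], "metadata": {"step": 0}},
    {"checkpoint_id": "c0", "next": ["__start__"], "metadata": {"step": -1}},
]
to_fork = checkpoint_at_step(history, 0)
```

Two checkpoints in this history have next == ['assistant'], so matching on next alone would be ambiguous. The step number picks out the one right after the human input.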

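Let's edit the state.

Remember how the reducer on messages works:

- It appends a message unless we supply a message ID.

- To overwrite a message instead of appending it to the state, we supply that message's ID!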
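MessagesState uses LangGraph's built-in add_messages reducer, which follows these rules. The sketch below is a simplified, dict-based model of that behavior for illustration only. It is not the library's implementation: merge_messages is a hypothetical name, and the real reducer works on message objects and handles more cases. The id is shortened from the output above.

```python
import uuid
from typing import Any


def merge_messages(left: list[dict[str, Any]], right: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Simplified id-based reducer: a message whose id already exists replaces
    the old one in place; every other message is appended."""
    merged = list(left)  # copy, so the existing state is not mutated
    position = {m["id"]: i for i, m in enumerate(merged)}
    for message in right:
        if message.get("id") is None:
            # No id supplied: assign a fresh one, so the message can only be appended
            message = {**message, "id": str(uuid.uuid4())}
        if message["id"] in position:
            merged[position[message["id"]]] = message  # same id: overwrite
        else:
            position[message["id"]] = len(merged)
            merged.append(message)
    return merged


state = [{"id": "93c18b95", "type": "human", "content": "Multiply 2 and 3"}]
appended = merge_messages(state, [{"type": "human", "content": "Multiply 3 and 3"}])
overwritten = merge_messages(
    state, [{"id": "93c18b95", "type": "human", "content": "Multiply 3 and 3"}]
)
```

Without an id, the edit ends up as a second human message after the original. With the original id, the single human message is replaced. This is why the forked input below reuses to_fork's message id.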

forked_input = {
    "messages": HumanMessage(
        # Reuse the original message's ID so the reducer overwrites it
        content="Multiply 3 and 3", id=to_fork["values"]["messages"][0]["id"]
    )
}

forked_config = await client.threads.update_state(
    thread["thread_id"], forked_input, checkpoint_id=to_fork["checkpoint_id"]
)
forked_config

{'configurable': {'thread_id': 'c99502e7-b0d7-473e-8295-1ad60e2b7ed2',
  'checkpoint_ns': '',
  'checkpoint_id': '1ef6a44b-90dc-68c8-8001-0c36898e0f34'},
 'checkpoint_id': '1ef6a44b-90dc-68c8-8001-0c36898e0f34'}

states = await client.threads.get_history(thread["thread_id"])
states[0]

{'values': {'messages': [{'content': 'Multiply 3 and 3',
    'additional_kwargs': {'additional_kwargs': {},
     'response_metadata': {},
     'example': False},
    'response_metadata': {},
    'type': 'human',
    'name': None,
    'id': '93c18b95-9050-4a52-99b8-9374e98ee5db',
    'example': False}]},
 'next': ['assistant'],
 'tasks': [{'id': 'da5d6548-62ca-5e69-ba70-f6179b2743bd',
   'name': 'assistant',
   'error': None,
   'interrupts': [],
   'state': None}],
 'metadata': {'step': 1,
  'source': 'update',
  'writes': {'__start__': {'messages': {'id': '93c18b95-9050-4a52-99b8-9374e98ee5db',
     'name': None,
     'type': 'human',
     'content': 'Multiply 3 and 3',
     'example': False,
     'additional_kwargs': {},
     'response_metadata': {}}}},
  'parents': {},
  'graph_id': 'agent'},
 'created_at': '2024-09-03T22:34:46.678333+00:00',
 'checkpoint_id': '1ef6a44b-90dc-68c8-8001-0c36898e0f34',
 'parent_checkpoint_id': '1ef6a44b-27ec-681c-8000-ff7e345aee7e'}
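The new checkpoint's parent_checkpoint_id is the checkpoint_id of to_fork. The fork therefore branches off the existing history instead of replacing it. The sketch below walks those parent links to show which checkpoints belong to a branch. lineage is a hypothetical helper, and the ids are made up but follow the shape of the get_history entries above.

```python
from typing import Any


def lineage(states: list[dict[str, Any]], checkpoint_id: str) -> list[str]:
    """Follow parent_checkpoint_id links from `checkpoint_id` back to the root
    and return the checkpoint ids on that path, newest first."""
    by_id = {s["checkpoint_id"]: s for s in states}
    chain: list[str] = []
    current = checkpoint_id
    # Stop at the root (parent is None) or at an id missing from this page of history
    while current in by_id and current not in chain:
        chain.append(current)
        current = by_id[current].get("parent_checkpoint_id")
    return chain


# Hypothetical history after the fork: "f1" was created by update_state from "c1".
history = [
    {"checkpoint_id": "f1", "parent_checkpoint_id": "c1"},
    {"checkpoint_id": "c4", "parent_checkpoint_id": "c3"},
    {"checkpoint_id": "c3", "parent_checkpoint_id": "c2"},
    {"checkpoint_id": "c2", "parent_checkpoint_id": "c1"},
    {"checkpoint_id": "c1", "parent_checkpoint_id": "c0"},
    {"checkpoint_id": "c0", "parent_checkpoint_id": None},
]
fork_branch = lineage(history, "f1")
original_branch = lineage(history, "c4")
```

The two branches share every checkpoint up to and including the fork point. After that point they diverge, and the original run's checkpoints remain available for replay.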

To rerun from the fork, we pass in its checkpoint_id.

async for chunk in client.runs.stream(
    thread["thread_id"],
    assistant_id="agent",
    input=None,
    stream_mode="updates",
    checkpoint_id=forked_config["checkpoint_id"],
):
    if chunk.data:
        # Each update is keyed by the name of the node that produced it
        assistant_node = chunk.data.get("assistant", {}).get("messages", [])
        tool_node = chunk.data.get("tools", {}).get("messages", [])
        if assistant_node:
            print("-" * 20 + "Assistant Node" + "-" * 20)
            print(assistant_node[-1])
        elif tool_node:
            print("-" * 20 + "Tools Node" + "-" * 20)
            print(tool_node[-1])

--------------------Assistant Node--------------------

{'content': '', 'additional_kwargs': {'tool_calls': [{'index': 0, 'id': 'call_aodhCt5fWv33qVbO7Nsub9Q3', 'function': {'arguments': '{"a":3,"b":3}', 'name': 'multiply'}, 'type': 'function'}]}, 'response_metadata': {'finish_reason': 'tool_calls', 'model_name': 'gpt-4o-2024-05-13', 'system_fingerprint': 'fp_157b3831f5'}, 'type': 'ai', 'name': None, 'id': 'run-e9759422-e537-4b9b-b583-36c688e13b4b', 'example': False, 'tool_calls': [{'name': 'multiply', 'args': {'a': 3, 'b': 3}, 'id': 'call_aodhCt5fWv33qVbO7Nsub9Q3', 'type': 'tool_call'}], 'invalid_tool_calls': [], 'usage_metadata': None}

--------------------Tools Node--------------------

{'content': '9', 'additional_kwargs': {}, 'response_metadata': {}, 'type': 'tool', 'name': 'multiply', 'id': '89787b0b-93de-4c0a-bea8-d2c3845534e1', 'tool_call_id': 'call_aodhCt5fWv33qVbO7Nsub9Q3', 'artifact': None, 'status': 'success'}

--------------------Assistant Node--------------------

{'content': 'The result of multiplying 3 by 3 is 9.', 'additional_kwargs': {}, 'response_metadata': {'finish_reason': 'stop', 'model_name': 'gpt-4o-2024-05-13', 'system_fingerprint': 'fp_157b3831f5'}, 'type': 'ai', 'name': None, 'id': 'run-0e16610f-4e8d-46f3-a5df-c2f187fae593', 'example': False, 'tool_calls': [], 'invalid_tool_calls': [], 'usage_metadata': None}
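Rerunning from a checkpoint uses the same client.runs.stream call as a normal run, with input=None and a checkpoint_id. To compare the forked run with the original in code instead of reading printed output, the loop above can be wrapped in a function that returns its results. The sketch below is a minimal version that uses only the runs.stream arguments already shown in this notebook. collect_updates and the FakeRuns stub are hypothetical names. The stub stands in for the SDK client so the sketch can run without a LangGraph server.

```python
import asyncio
from types import SimpleNamespace
from typing import Any


async def collect_updates(
    client: Any, thread_id: str, checkpoint_id: str, assistant_id: str = "agent"
) -> list[tuple[str, Any]]:
    """Stream a run from `checkpoint_id` in "updates" mode and collect
    (node name, last message) pairs instead of printing them."""
    results: list[tuple[str, Any]] = []
    async for chunk in client.runs.stream(
        thread_id,
        assistant_id=assistant_id,
        input=None,  # no new input: resume from the checkpoint's saved state
        stream_mode="updates",
        checkpoint_id=checkpoint_id,
    ):
        for node, update in (chunk.data or {}).items():
            # Skip payloads that are not node updates, e.g. run metadata values
            if isinstance(update, dict) and update.get("messages"):
                results.append((node, update["messages"][-1]))
    return results


# Hypothetical stand-in for client.runs, yielding chunks shaped like the output above.
class FakeRuns:
    def __init__(self) -> None:
        self.last_kwargs: dict[str, Any] = {}

    async def stream(self, thread_id: str, **kwargs: Any):
        self.last_kwargs = kwargs
        yield SimpleNamespace(event="metadata", data={"run_id": "run-1"})
        yield SimpleNamespace(event="updates", data={"assistant": {"messages": [
            {"content": "", "tool_calls": [{"name": "multiply", "args": {"a": 3, "b": 3}}]}]}})
        yield SimpleNamespace(event="updates", data={"tools": {"messages": [{"content": "9"}]}})


fake_client = SimpleNamespace(runs=FakeRuns())
trace = asyncio.run(collect_updates(fake_client, "thread-1", "checkpoint-1"))
```

Inside the notebook, an event loop is already running, so you would call await collect_updates(client, thread["thread_id"], forked_config["checkpoint_id"]) instead of asyncio.run.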

LangGraph Studio

Let's look at forking in the Studio UI with our agent, which uses module-1/studio/agent.py as configured in module-1/studio/langgraph.json.